CEPH

Ceph Overview

Ceph is a highly scalable distributed storage solution that provides object, block, and file storage in a unified system. Ceph uses distributed nodes and data replication to provide high availability and redundancy, making it a popular choice for cloud storage solutions.

Ceph consists of several key components:

- Ceph Monitor (MON): Tracks the cluster state and maintains the map of the cluster.

- Ceph Manager (MGR): Provides additional monitoring and administrative functionalities.

- Object Storage Daemons (OSD): Handle data storage, data replication, recovery, and rebalancing.

- Ceph Metadata Server (MDS): Manages metadata for the Ceph file system (CephFS), allowing POSIX-compliant file system operations.

- RADOS Gateway (RGW): Provides an object storage interface compatible with Amazon S3 and OpenStack Swift.

Minimum Requirements for Ceph

- Hardware:

  - CPU: At least 2 cores per OSD daemon.

  - RAM: Minimum of 2 GB of RAM per OSD (4 GB per OSD recommended for better performance).

  - Storage: SSD or HDD for OSD storage. An SSD is recommended for the Ceph MON.

  - Network: 1 Gbps network interface card (NIC) per storage node. 10 Gbps is preferred for production.

- Software:

  - Operating System: Linux distributions like Ubuntu, CentOS, or RHEL.

  - Ceph Version: Latest stable release (e.g., Pacific, Quincy).

  - Dependencies: Python, Ceph packages, system libraries like libboost.

Recommended Matrix for Optimal Performance

For optimal performance in a Ceph cluster, consider the following:

- Cluster Size:

  - Start with at least 3 MON nodes and 3 OSD nodes to ensure redundancy and fault tolerance.

  - OSD per Node: Each node should have multiple OSDs depending on the storage capacity and performance needs. A good starting point is 6-12 OSDs per node.

- Network:

  - 10 Gbps network for internal cluster traffic.

  - Separate networks for Ceph public (client) traffic and cluster (replication and recovery) traffic to avoid congestion.

- CPU and RAM:

  - OSD Nodes: 2-4 cores per OSD, and 4-8 GB of RAM per OSD.

  - MON Nodes: Minimum of 4 cores and 8 GB of RAM per MON.

  - MDS Nodes: For CephFS, at least 4 cores and 16 GB of RAM per MDS, more for higher metadata performance.

- Storage Configuration:

  - Place the BlueStore WAL and DB on SSDs (the BlueStore counterpart of the older FileStore journal) and use HDDs or SSDs for OSD data, depending on workload.

  - Keep 3x replication for replicated pools unless the workload justifies a change; it is the default and provides fault tolerance.
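The per-OSD figures above scale linearly with the number of OSDs on a node, so a node's totals can be estimated with simple arithmetic. The following is a minimal POSIX shell sketch; the function name `node_requirements` is hypothetical, and the totals cover the OSD daemons only, not the operating system or any co-located services.

```shell
# Estimate CPU cores and RAM needed by the OSD daemons on one node.
# usage: node_requirements <osds_per_node> <cores_per_osd> <ram_gb_per_osd>
node_requirements() {
    osds=$1
    cores_per_osd=$2
    ram_gb_per_osd=$3
    cores=$((osds * cores_per_osd))
    ram_gb=$((osds * ram_gb_per_osd))
    printf 'osds=%d cores=%d ram_gb=%d\n' "$osds" "$cores" "$ram_gb"
}

# Low and high ends of the recommended range for a 6-OSD node.
node_requirements 6 2 4
node_requirements 6 4 8
```

For a 6-OSD node this gives a range of 12 to 24 cores and 24 to 48 GB of RAM, which is a useful sanity check before choosing how many OSDs to pack into a chassis.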

Working Example

Here’s an example setup for a small to medium-sized Ceph cluster:

- Cluster Nodes: 6 nodes

- OSD Nodes: 3 nodes with 6 OSDs per node (total 18 OSDs).

- OSD Configuration: Each node has 1 SSD for the BlueStore WAL/DB and 6 HDDs (or SSDs) for data.

- MON Nodes: 3 nodes with MON and MGR daemons running on them.

- MON Configuration: Each MON node has 1 SSD for the monitor store and 8 GB of RAM.

- Network: 10 Gbps internal network with a dedicated VLAN for Ceph traffic.
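To get a rough sense of how much data this layout can hold, raw capacity can be divided by the replication factor and scaled by a fill target. The sketch below assumes 4000 GB drives, which is a hypothetical figure not given in the example above, along with 3x replication and an 85% fill target chosen to match Ceph's default nearfull warning ratio. The function name `usable_capacity_gb` is also hypothetical.

```shell
# Estimate usable capacity of a replicated pool, in GB (integer arithmetic).
# usage: usable_capacity_gb <osd_count> <drive_gb> <replicas> <fill_percent>
usable_capacity_gb() {
    osd_count=$1
    drive_gb=$2      # assumed drive size, not part of the example setup
    replicas=$3
    fill_percent=$4
    raw_gb=$((osd_count * drive_gb))
    # Multiply before dividing to limit integer truncation.
    echo $((raw_gb * fill_percent / (replicas * 100)))
}

# 18 OSDs, 4000 GB each (assumption), 3x replication, 85% fill target.
usable_capacity_gb 18 4000 3 85
```

Under these assumptions the 18-OSD cluster holds 72000 GB raw but only about 20400 GB of client data, which shows how much of the raw capacity replication and headroom consume.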

Example: Ceph Deployment

- Deploy MONs:

ceph-deploy new mon1 mon2 mon3

ceph-deploy install mon1 mon2 mon3

ceph-deploy mon create-initial

- Deploy MGRs:

ceph-deploy mgr create mon1 mon2 mon3

- Deploy OSDs:

ceph-deploy disk zap osd1 /dev/sdb

ceph-deploy osd create --data /dev/sdb osd1

Repeat both commands for every OSD host and data device (osd2, osd3, and so on). The host-then-device argument order is the ceph-deploy 2.x syntax; 1.5.x releases used host:device pairs instead.

- Deploy MDS (for CephFS):

ceph-deploy mds create mds1

- Check Cluster Health:

ceph -s

This example shows a basic Ceph cluster with MON, MGR, OSD, and MDS services. It is a starting point: tune and scale the hardware and software settings to fit your workloads. Note that ceph-deploy is no longer actively maintained and is not tested against releases newer than Nautilus; for Pacific or Quincy, cephadm is the supported deployment tool.
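Because the zap and create steps must be run once per host and device, it can help to generate the full command list before executing anything. The sketch below only prints commands and never runs them. It assumes the ceph-deploy 2.x argument order (host and device as separate arguments), and the host names, device names, and the function name `osd_commands` are placeholders for the 3-node, 6-OSD layout described earlier.

```shell
# Print (do not execute) the zap and create commands for each host/device pair.
# usage: osd_commands "<space-separated hosts>" "<space-separated devices>"
osd_commands() {
    hosts=$1
    devices=$2
    # $hosts and $devices are left unquoted on purpose so they split on spaces.
    for host in $hosts; do
        for dev in $devices; do
            echo "ceph-deploy disk zap $host $dev"
            echo "ceph-deploy osd create --data $dev $host"
        done
    done
}

# Placeholder hosts and devices: 3 nodes with 6 data disks each.
osd_commands "osd1 osd2 osd3" "/dev/sdb /dev/sdc /dev/sdd /dev/sde /dev/sdf /dev/sdg"
```

Reviewing the printed list first is a cheap safeguard, since `disk zap` destroys the partition table and contents of the target device.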

Recommended Ceph Cluster Configuration Table

The table below outlines minimum, recommended, and optimal configurations for a Ceph cluster, depending on the size and scale of the deployment:

| Component | Minimum Requirement | Recommended Configuration | Optimal Configuration |
|---|---|---|---|
| CPU per OSD | 2 cores per OSD daemon | 2-4 cores per OSD daemon | 4-6 cores per OSD daemon |
| RAM per OSD | 2 GB per OSD | 4-8 GB per OSD | 8-12 GB per OSD |
| OSDs per Node | 4-6 OSDs per node | 6-12 OSDs per node | 12-24 OSDs per node |
| MON Nodes | 3 MON nodes | 3-5 MON nodes | 5 MON nodes with SSD storage |
| MDS Nodes | 2-4 cores, 16 GB RAM | 4 cores, 32 GB RAM | 8 cores, 64 GB RAM for high metadata workloads |
| Network | 1 Gbps per node | 10 Gbps internal network | 10-25 Gbps network with dedicated Ceph VLAN |
| Storage for OSDs | HDD or SSD | SSDs for BlueStore WAL/DB, HDDs for data | All-flash SSD arrays for high performance |
| Replication | 3x replication | 3x replication | Erasure coding for better space efficiency |
| Ceph Version | Latest stable release | Latest stable release | Latest stable release |
| Operating System | Linux distribution (Ubuntu, CentOS, RHEL) | Ubuntu 22.04 LTS / CentOS Stream 8 | Latest LTS Linux distribution |
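The space-efficiency gain that the Replication row attributes to erasure coding can be quantified: a replicated pool stores one usable copy out of every N written, while an erasure-coded pool stores k data chunks out of every k + m chunks written. The sketch below compares the two; the k=4, m=2 profile is an illustrative assumption rather than a value from the table, and both function names are hypothetical.

```shell
# Percentage of raw capacity that holds unique data in a replicated pool
# keeping <copies> full copies of every object (integer, rounded down).
replicated_usable_percent() {
    copies=$1
    echo $((100 / copies))
}

# Percentage of raw capacity that holds unique data in an erasure-coded pool
# with <k> data chunks and <m> coding chunks per object (rounded down).
ec_usable_percent() {
    k=$1
    m=$2
    echo $((100 * k / (k + m)))
}

echo "3x replication: $(replicated_usable_percent 3)% usable"
echo "EC k=4 m=2:     $(ec_usable_percent 4 2)% usable"
```

With these inputs, 3x replication leaves about 33% of raw capacity for data while the assumed 4+2 profile leaves about 66%, and both layouts survive the loss of two OSDs holding pieces of the same object. The trade-off is that erasure coding costs more CPU and adds latency on writes and recovery.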

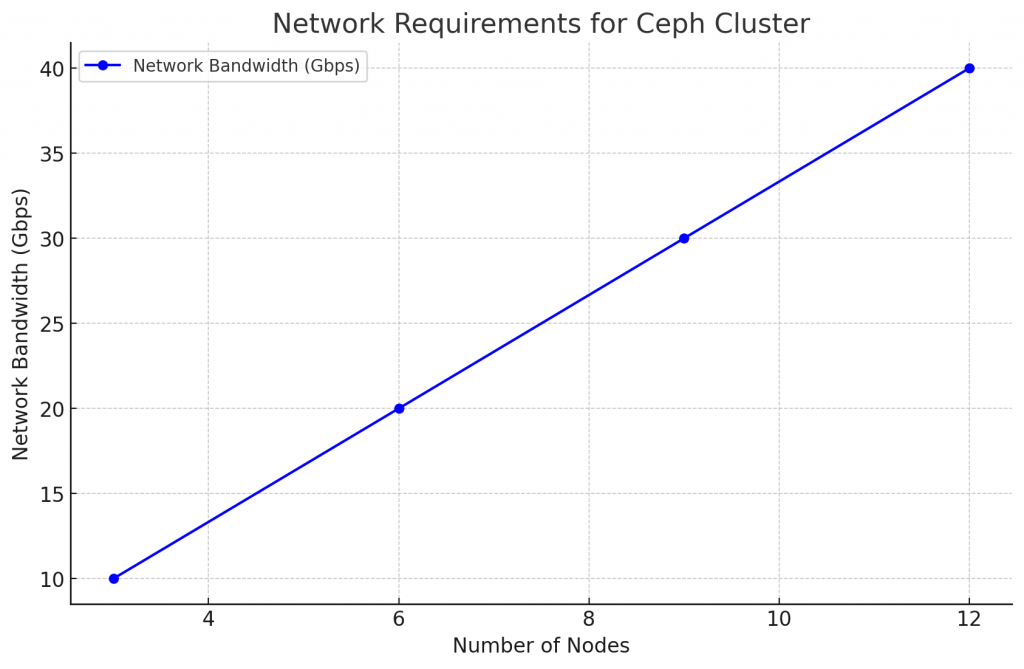

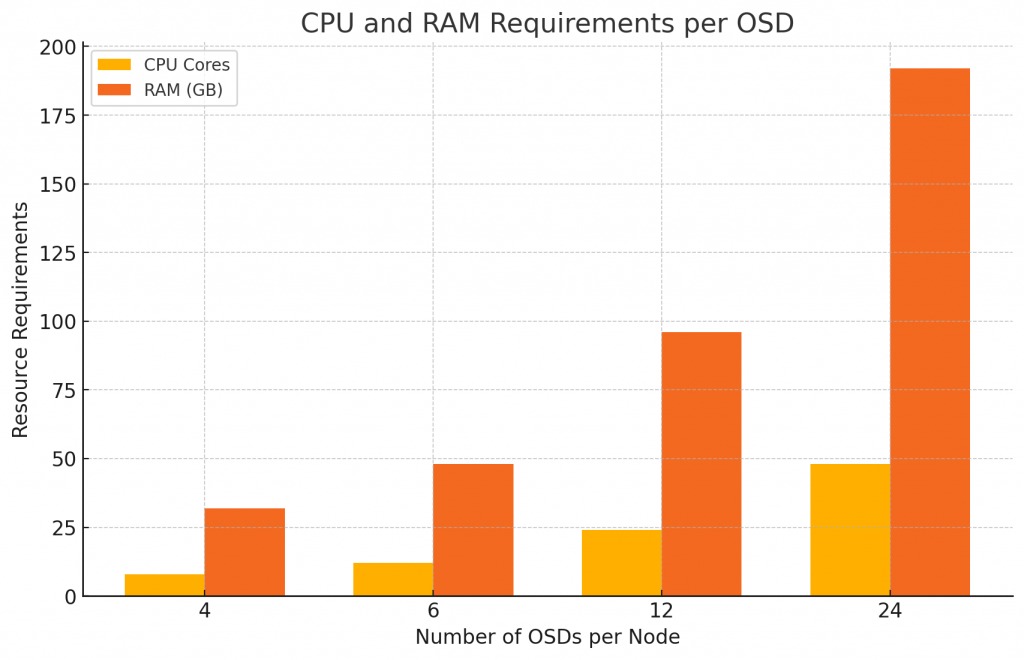

Charts for Optimal Ceph Performance Configuration

Below are descriptions for two charts that help visualize the optimal Ceph configuration.

- CPU and RAM Requirements per OSD (Bar Chart):

  - X-axis: Number of OSDs (4, 6, 12, 24)

  - Y-axis: Required CPU cores and RAM (in GB)

  - The chart shows a bar for the number of CPU cores and another bar for the amount of RAM required for different numbers of OSDs per node.

- Network Requirements (Line Chart):

  - X-axis: Number of Nodes

  - Y-axis: Required Network Bandwidth (in Gbps)

  - The chart illustrates how the network bandwidth requirements increase as the number of nodes in the cluster grows, indicating the need for faster network configurations (1 Gbps, 10 Gbps, and 25 Gbps).